Surviving the AI Squeeze: Pricing, Power, and Platform Lock-In

A historic $1.8T wave of AI infrastructure spending – capped by OpenAI’s $300B Oracle deal – is reshaping cost structures, pricing dynamics, and workforce transitions.

Less than two weeks after a high-profile White House tech summit on September 4, where Meta, Apple, Google, and Microsoft together pledged about $1.5 trillion in U.S. data center and chip investments, OpenAI stunned the industry with a $300 billion cloud deal with Oracle. This five-year agreement provides OpenAI with 4.5 gigawatts of computing capacity – roughly enough electricity to power four million homes. The headlines frame these moves as historic “infrastructure investments.” But for businesses relying on AI, these commitments foretell something else entirely: margin compression on an unprecedented scale.

To put the scale in perspective, $1.8 trillion is more than the inflation-adjusted cost of the entire U.S. Interstate Highway System. This isn’t just another tech announcement; it’s the starting gun for the biggest workforce and infrastructure overhaul since assembly lines replaced craftsmen. And as these trillions are poured into AI, companies’ profit-and-loss statements will feel the strain.

A Double-Edged Equation for Jobs and Costs

These massive commitments are creating a paradox that’s already hitting corporate budgets and workforce plans. On one hand, AI leaders are warning of radical productivity shifts that could eliminate huge swaths of white-collar jobs. Geoffrey Hinton – a pioneer often dubbed the “godfather of AI” – has warned that AI could create “massive unemployment,” making a few rich and most people poorer. And Dario Amodei, CEO of Anthropic, predicts AI could wipe out half of all entry-level white-collar jobs within five years, a potential “white-collar bloodbath” scenario. In plain terms, tasks once done by armies of analysts, accountants, and coordinators may be largely automated by 2030.

Yet at the same time, AI’s champions like Sam Altman of OpenAI promise that new efficiencies will drastically reduce costs. Altman projects that the cost “to use a given level of AI” will fall about 10× every 12 months, thanks to custom AI chips and cheap energy – effectively a 90% cost crash each year. He argues that advancements like specialized silicon and green energy will make AI vastly cheaper to run, offsetting today’s high prices.

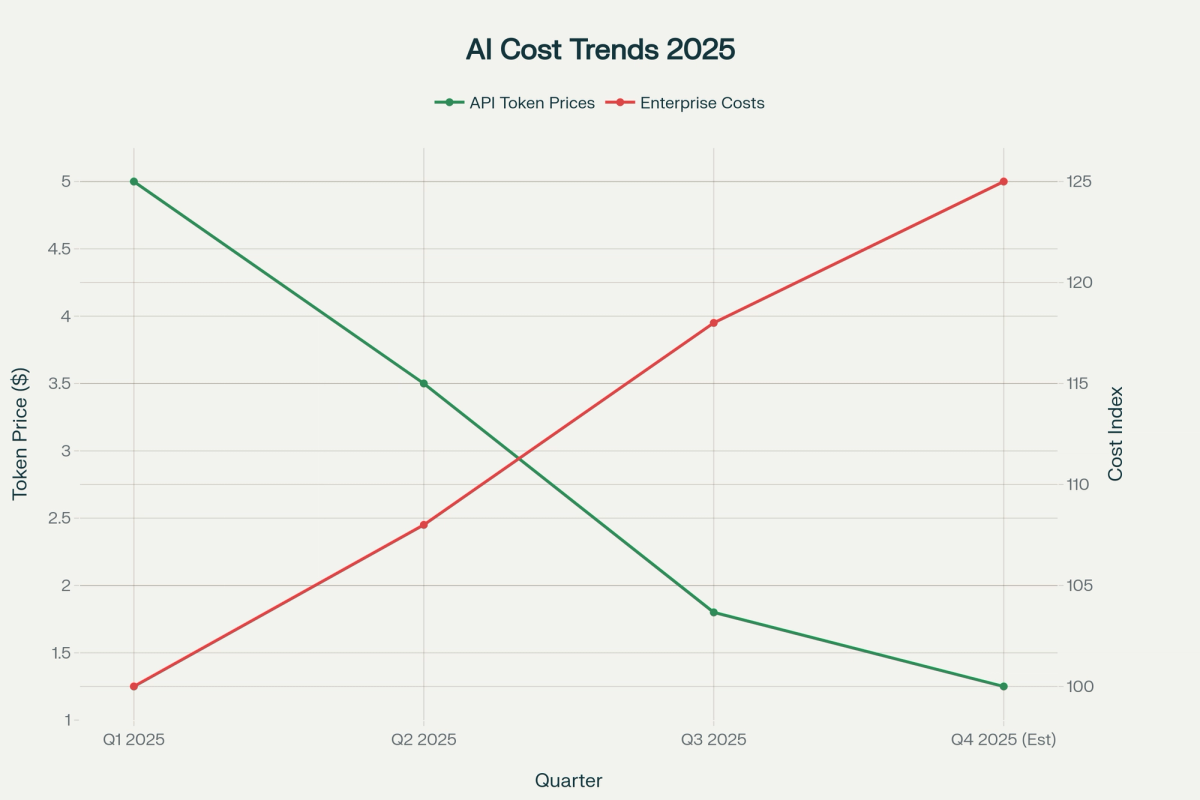

The reality so far? Corporate AI expenses are soaring, not shrinking. Despite price cuts for AI services, enterprise AI costs jumped sharply in the past year as usage exploded. For example, OpenAI’s latest GPT-5 model launched with API pricing roughly 75% lower per token (around $1.25 per million input tokens for the flagship model) than its predecessor – a seemingly deflationary move. But businesses promptly increased their usage by orders of magnitude, and vendors have added new fees for things like data handling and specialized computing. The result: many companies are seeing 30% or more growth in AI spending even as unit prices fall. The disconnect between Silicon Valley’s promised cost curves and the bills that CIOs are actually receiving has never been wider.
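The arithmetic behind that disconnect is easy to sketch. Total spend is unit price times usage, so a 75% price cut lowers the bill only if usage grows less than 4×. The snippet below is a minimal illustration under that simple model, not a billing tool: the function names are our own, and the 10× usage figure is a hypothetical stand-in for "orders of magnitude."

```python
def breakeven_usage_multiple(price_cut: float) -> float:
    """Usage growth factor at which total spend is unchanged after a unit price cut."""
    return 1.0 / (1.0 - price_cut)


def spend_multiple(price_cut: float, usage_multiple: float) -> float:
    """Total spend relative to before: (new unit price) x (new usage)."""
    return (1.0 - price_cut) * usage_multiple


def usage_multiple_for_spend_growth(price_cut: float, spend_growth: float) -> float:
    """Usage growth implied by an observed spend increase after a unit price cut."""
    return (1.0 + spend_growth) / (1.0 - price_cut)


if __name__ == "__main__":
    cut = 0.75  # the ~75% per-token price reduction cited above

    # Usage only has to quadruple for the discount to be fully absorbed.
    print(f"break-even usage growth: {breakeven_usage_multiple(cut):.1f}x")

    # A 30% rise in spend implies usage grew a little over fivefold.
    print(f"usage growth behind +30% spend: {usage_multiple_for_spend_growth(cut, 0.30):.1f}x")

    # Hypothetical: if usage grows 10x, the bill grows 2.5x despite the cut.
    print(f"spend multiple at 10x usage: {spend_multiple(cut, 10.0):.1f}x")
```

Read this way, a 30% rise in spend is the mild case. Any team whose usage grows by a full order of magnitude would see its bill more than double even at the discounted rate.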

To understand why, follow the money. That $1.8T in investment will fund the construction of thousands of new data centers globally, which in turn creates a hiring boom in some sectors – but severe cuts in others. Building and outfitting these server farms could directly create on the order of 600,000 jobs (roughly 300,000 in construction trades, 200,000 in chip and hardware manufacturing, and 100,000 in technical services to stand up and maintain the centers). It sounds like an employment windfall. But at the same time, entry-level office roles – the traditional first rungs on the corporate ladder – are being openly targeted for elimination by AI. As one industry observer noted, many firms are now hiring AI software in place of entry-level analysts and coordinators, essentially burning the bottom of the org chart to fuel the top. The painful paradox is that AI is creating and destroying jobs simultaneously: for every data center electrician or chip factory technician needed, a back-office clerk or junior banker may be rendered redundant. (In fact, a 2024 New York Times report warned that incoming junior analyst roles on Wall Street could be among the first to go, displaced by AI models that can analyze loans or markets instantly.)

The Pricing Shell Game

A similar paradox is playing out with AI’s direct costs. Consider how AI services are priced and consumed today. In June, OpenAI dramatically slashed prices for its GPT-4 API, and by the time GPT-5 arrived, token costs were a fraction of what they had been a year prior. On paper, that looks like deflation. In practice, it became a pricing shell game. Cheap tokens led to explosive growth in usage – akin to a new addiction in the enterprise. AI queries became the new corporate cocaine: everyone’s using more, few are tracking the consumption, and the bill shock comes later. One bank’s CTO admitted their developers kept engineering ever-longer prompts (to boost accuracy), pushing monthly usage up 40% through sheer prompt drift. The steep discounts per unit hid a ballooning total spend.

The real damage is now showing up in product margins. Those billions of tokens companies are feeding into AI models? In some industries, the direct AI service fees are devouring 30–70% of the gross margin on AI-enhanced products, whereas traditional software licenses might have been only 3–7% of costs. In financial services, for instance, an AI-driven lending or wealth product might carry such high AI usage fees that only a sliver of revenue is left as profit. This isn’t just a new line item in the cost structure – it’s a fundamental margin restructuring. AI usage costs, if unmanaged, can flip a high-margin software product into a low-margin services-like model.
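To see what that range does to a product's economics, here is a rough sketch. It reads "devouring 30–70% of the gross margin" as AI fees equal to that share of the pre-AI gross margin, which is one interpretation of the claim. The 80% starting margin is a hypothetical, software-like baseline rather than a figure from any company discussed here.

```python
def margin_after_ai_fees(gross_margin: float, ai_share_of_margin: float) -> float:
    """Gross margin (as a fraction of revenue) left after AI fees consume a share of it."""
    if not 0.0 <= ai_share_of_margin <= 1.0:
        raise ValueError("ai_share_of_margin must be between 0 and 1")
    return gross_margin * (1.0 - ai_share_of_margin)


if __name__ == "__main__":
    baseline = 0.80  # hypothetical pre-AI gross margin for a software product

    for share in (0.30, 0.70):  # the 30-70% range cited above
        remaining = margin_after_ai_fees(baseline, share)
        print(f"AI fees at {share:.0%} of margin -> {remaining:.0%} gross margin remains")
```

Under these assumptions, an 80% margin product lands somewhere between 56% and 24%, which is the slide from software-like to services-like margins described above.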

Worse, many of these costs are hidden in plain sight. Cloud vendors charge for things like data storage, model “optimization” and fine-tuning, and especially for moving data in and out of AI systems – often at premium rates. One healthcare company, for example, ran a small AI pilot expected to cost $50,000 per month; with additional “infrastructure optimization” fees and data pipeline charges, it spiraled to $200,000 per month. As they ruefully noted, Oracle isn’t running a charity with that $300B deal – every ounce of compute or bandwidth is monetized. Token-based pricing, once lauded for its elegance, has proven to be a trap for many: usage can run away quickly, and few organizations have mature metering or governance to keep it in check.

We’re now seeing AI expenses pop up in departmental budgets the same way cloud shadow IT did a decade ago. Marketing expenses a ChatGPT subscription, sales signs up for an AI-driven CRM add-on, engineering pays for code-generation API calls, and so on. Each team’s spend looks small, but aggregated enterprise-wide it’s significant. The average large enterprise now employs hundreds of different AI tools across various departments – one recent analysis found 254 distinct AI-powered apps in use at the typical company – each with its own billing model and often little central oversight. The result is “Shadow AI” spend that makes the old shadow IT problem look quaint. We’ve tracked cases where a company’s official AI budget was $2.3 million, but the actual spend – buried in SaaS upcharges and cloud bills – was over $5.7 million. In other words, more than half of AI spending was flying under the radar until finance eventually caught on. AI costs are becoming the new bandwidth or cloud storage: largely invisible until they’re consuming 30–40% of tech operating expenses, at which point it’s too late for an easy fix.
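The "more than half" figure follows directly from the two numbers in that example. A minimal check, using only the $2.3 million and $5.7 million figures quoted above (the function name is ours):

```python
def hidden_spend(official_budget: float, actual_spend: float) -> tuple[float, float]:
    """Return (unbudgeted dollars, unbudgeted share of actual spend)."""
    hidden = actual_spend - official_budget
    return hidden, hidden / actual_spend


if __name__ == "__main__":
    hidden, share = hidden_spend(official_budget=2.3e6, actual_spend=5.7e6)
    print(f"unbudgeted AI spend: ${hidden:,.0f}")
    print(f"share of actual spend outside the official budget: {share:.1%}")
```

Roughly $3.4 million, or about 60% of the real total, sat outside the official budget in that case.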

For most enterprises, this means a hard look in the mirror. The CIO or CFO reading about 75% price reductions should be asking: Is our total spend actually decreasing, or just our cost per unit? Thus far, the answer has been the latter.

Regional Arbitrage and the Energy Trap

All this AI infrastructure has to live somewhere, and location is everything when it comes to costs and risk. We are witnessing an AI data center land rush that is redrawing the map of tech hubs. States like Texas are emerging as big winners for hosting AI operations, thanks to a combination of relatively cheap power, business-friendly regulation, and ample land. Companies can build faster and run servers at lower cost per kilowatt in Texas than in, say, California or New York. It’s no coincidence that major cloud players and startups alike are siting huge AI computing farms in the Lone Star State. Meanwhile, Georgia and North Carolina are becoming the AI corridor of the U.S. Southeast – for example, Amazon has announced a $10 billion AI computing campus in North Carolina and a similar $11 billion investment in Georgia to expand AWS cloud and generative AI capacity. These regions boast a mix of affordable land, growing renewable energy assets, and state incentives.

California, by contrast, still holds the talent advantage – the top AI research labs and experts are largely in Silicon Valley, with another cluster up the coast in Seattle – but that edge comes at punishing cost. Power in California can be 2–3× more expensive than in energy-rich states, real estate and wages are high, and permitting for new facilities is slow. As a result, even California-based companies are expanding AI backends elsewhere (or relying on cloud regions in lower-cost states) to remain competitive. Overall, the gap between “AI hubs” and other areas is significant: all-in operating costs can differ by 25–30% depending on region, which is not a rounding error when you’re modeling a five-year infrastructure plan.

However, geography also creates an energy trap. Advanced AI operations consume staggering amounts of electricity – on the order of 10× the power of traditional compute workloads per server rack, according to experts. Training a single cutting-edge model can draw as much power as a small town, and running millions of AI queries (inference) continuously isn’t far behind. In Texas, where an outsized share of new data centers is being built, the electric grid is already straining. On hot summer days, spot power prices in Texas have spiked by 300% or more during peak hours. The ERCOT grid operator warned that peak electricity demand could double by 2031, largely due to AI and cloud data center growth. In practical terms, one severe heat wave or cold snap could send prices skyrocketing and even force rolling blackouts – a nightmare scenario if your AI platform needs to be online 24/7. A single afternoon of $9,000 per MWh electricity (hitting the price cap, as Texas saw in 2021) could blow an entire quarter’s profit forecast for an energy-intensive AI service.
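A back-of-the-envelope sketch shows why a single afternoon at the cap matters. The $9,000 per MWh figure comes from the text above; the 100 MW load, the five-hour window, and the $50 per MWh "normal" price are hypothetical inputs chosen only to illustrate the scale, and the calculation assumes full exposure to spot prices with no hedge.

```python
def energy_cost(load_mw: float, hours: float, price_per_mwh: float) -> float:
    """Energy cost in dollars for a constant load: MW x hours x $/MWh."""
    return load_mw * hours * price_per_mwh


if __name__ == "__main__":
    load_mw = 100.0        # hypothetical data center load
    hours = 5.0            # hypothetical length of the price spike
    normal_price = 50.0    # hypothetical everyday wholesale price, $/MWh
    cap_price = 9_000.0    # price cap cited above, $/MWh

    normal = energy_cost(load_mw, hours, normal_price)
    spike = energy_cost(load_mw, hours, cap_price)

    print(f"normal afternoon: ${normal:,.0f}")
    print(f"afternoon at the cap: ${spike:,.0f}")
    print(f"multiple: {spike / normal:.0f}x")
```

With these inputs, a power bill of about $25,000 becomes $4.5 million for the same five hours. Operators with fixed-price contracts would not see that swing, which is the point of the long-term agreements discussed next.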

The smart players see this coming. They are racing to lock in long-term energy contracts and invest in dedicated renewable projects to secure supply. Data center operators are signing 10–15 year power purchase agreements for wind, solar, and even exploring small-scale nuclear reactors to guarantee cheaper power. They know that as everyone else wakes up to AI’s appetite, the grid can’t handle what’s coming without massive investment – and that means higher prices for latecomers. Watching the utility companies’ capital expenditure plans has become a key leading indicator for AI costs: if your local power provider announces billions in grid upgrades or new plants (to support data centers), you can bet those costs will trickle through to your electricity rates over time.

In short, energy is the new bottleneck. In the era of cloud computing, bandwidth was the limiting factor; for AI, it’s kilowatts. No matter how advanced your AI models are, they are useless if you can’t run them affordably. The spread between energy-rich and energy-poor regions could define the next decade’s winners and losers in AI. Every major new AI model seems to require roughly 10× the compute (and energy) of its predecessor. By some estimates, GPT-6 will need infrastructure that doesn’t even exist yet – implying enormous new power and cooling demands. Guess who will pay to build that? Ultimately, the customers and enterprises using these models.

The Vendor Lock-In Nobody Wants to Admit

There’s another trap forming, less visible but just as perilous: AI platform lock-in. The AI cloud ecosystem today is consolidating around a few heavyweight alliances: OpenAI tightly intertwined with Microsoft Azure, Anthropic partnering with Amazon AWS, Google’s Gemini models tied to Google Cloud, and a handful of others. These aren’t open marketplaces – they are becoming digital roach motels for enterprise data and workflows. Once you commit to one of these AI stacks, switching away is incredibly costly. The models, the APIs, the fine-tunings, and the integrations all become deeply embedded in how your business operates.

One financial services firm learned this the hard way. They started out using OpenAI’s APIs and tools, but later tried to migrate some systems to Anthropic’s Claude (seeking better performance or pricing). After six months of effort and around $2 million spent on migration attempts, they gave up – the switch was too complex and expensive. Retraining models, rewriting integration code, reformatting data, and retraining staff on the new platform wiped out any projected savings. In fact, a broader assessment found that for a typical large enterprise deeply invested in one AI platform, a full migration could cost $8+ million and take over a year, including as much as $400,000 just in data egress fees to pull their own data out of the first cloud. Little surprise that the project was abandoned with the conclusion, “we’re stuck.”

This goes beyond the kind of vendor lock-in we knew in the past (like an Oracle database or an SAP ERP system). With AI, the dependency is multilayered: your data is formatted for a specific model, your prompts and queries are optimized for its quirks, your engineers are trained on its tools, and even your business processes may adapt to what that model can or cannot easily do. Shifting to another provider means rebuilding fundamental pieces of your intellectual property and workflow. It’s a one-way door. No wonder each AI provider is rushing to deepen these ties. The providers themselves, meanwhile, are doing the opposite with their own suppliers: OpenAI is spreading its workloads across multiple clouds and chip suppliers, spending lavishly to ensure enough capacity. The company has committed at least $19 billion to cloud compute deals outside of Microsoft – including an $11.9B contract with CoreWeave and a new partnership with Google Cloud – and $10 billion to developing custom AI chips with Broadcom. OpenAI isn’t diversifying its suppliers out of prudence; it’s desperately scouring for every GPU it can get, to avoid being bottlenecked by any single partner.

The meteoric rise of CoreWeave illustrates how scarcity translates to pricing power in this realm. Virtually unknown 18 months ago, CoreWeave positioned itself as an alternative cloud for GPU computing and, crucially, had inventory of Nvidia AI chips when others didn’t. OpenAI and others flocked to them, and CoreWeave’s valuation surged (its stock jumped 270% above IPO price within months). The company’s value grew roughly 10× in a year, purely on the premise that it could deliver compute that was otherwise unavailable. Scarcity drives leverage. And as counter-intuitive as it sounds given all the new data centers being built, AI compute is actually getting scarcer in the near term. Demand is growing faster than the supply of top-tier AI chips and power. This is why OpenAI is signing multibillion-dollar deals with every major cloud (Oracle, Microsoft, Google) and pushing to develop its own chips – it’s hedging against being constrained.

For businesses, the implication is sobering. The more you weave AI into your operations, the more you may find your fate tied to a particular vendor’s ecosystem. The big platforms are offering tantalizing discounts and integration benefits to lure enterprises in – and indeed, large customers can negotiate prices 50% or more lower in exchange for volume commitments. But once the honeymoon is over, those same customers face steep switching costs. Mid-sized firms, which lack bargaining power, end up paying premium rates and often overage fees when usage exceeds plan. They effectively subsidize the discounts given to the giants.

Workforce Transformation Woes

Amid all the focus on infrastructure, another cost is creeping up that many companies are underestimating: the workforce transformation required by AI. We’ve heard for years that automation will replace certain jobs and create new ones, and that overall productivity will rise. Historically, this kind of transition (think factory automation or the IT revolution) had a relatively gradual pace, allowing labor markets and organizations to adjust over decades. This time it’s different – the timeline is compressed dramatically. Changes that took the internet 20 years might hit in 5 years or less with AI. Companies still knee-deep in their “digital transformation” efforts risk fighting the last war; the AI upheaval is arriving faster than their playbooks anticipate.

One brutal truth is the “double cost” period that accompanies major AI deployments. In the short term, you often have to pay for both the new AI systems and the existing human workforce that AI is meant to augment or replace. During this handover period, productivity can crater. Employees need to be retrained to work alongside AI or in new roles; processes are in flux; errors might rise as people learn to trust or calibrate the AI outputs. In large AI rollouts we’ve observed, it’s common to see a 30–40% drop in productivity lasting 6–12 months in the affected functions, before improvements kick in. That’s a costly dip that few budgets model for. You’re essentially paying full payroll and new AI expenses while getting less output than before, until the kinks are ironed out. It’s the storm before the calm, and it kills margins in the interim (sometimes wiping out the gains the AI was supposed to deliver for a year or more).
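One way to put a number on the double-cost period is to look at cost per unit of output rather than total cost. The sketch below assumes payroll stays flat, output falls by the 30–40% described above, and AI spend is added on top as a fraction of payroll. That fraction (10% here) is a hypothetical input, and the function name is our own.

```python
def cost_per_output_multiple(productivity_drop: float, ai_cost_ratio: float = 0.0) -> float:
    """Cost per unit of output during the overlap, relative to the pre-AI baseline.

    productivity_drop: fractional loss of output (0.30 means 30% less output).
    ai_cost_ratio: new AI spend as a fraction of payroll (hypothetical input).
    """
    if not 0.0 <= productivity_drop < 1.0:
        raise ValueError("productivity_drop must be in [0, 1)")
    return (1.0 + ai_cost_ratio) / (1.0 - productivity_drop)


if __name__ == "__main__":
    for drop in (0.30, 0.40):
        payroll_only = cost_per_output_multiple(drop)
        with_ai = cost_per_output_multiple(drop, ai_cost_ratio=0.10)
        print(
            f"{drop:.0%} productivity drop: "
            f"{payroll_only:.2f}x cost per unit of output, "
            f"{with_ai:.2f}x with AI spend at 10% of payroll"
        )
```

Even before any AI bill arrives, a 30–40% productivity drop raises the cost of each unit of output by roughly 43–67%. Adding the AI spend pushes it higher still, and that gap persists for as long as the dip lasts.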

Meanwhile, the labor market for AI talent is painfully tight. Machine learning engineers, AI prompt specialists, data scientists – they command hefty salaries, often 40–60% higher than comparable roles without AI skills. And that’s if you can even find them; demand far outstrips supply in many regions. Companies are having to pay a premium to hire or upskill people to lead their AI efforts, just as they look to trim costs elsewhere.

What about reskilling your existing staff? That’s the socially responsible approach and often the only way to fill certain skill gaps, but it’s expensive and uncertain. Studies have found that reskilling a displaced worker for a new role costs tens of thousands of dollars – around $24,000 on average per worker in one analysis. Even less intensive upskilling programs easily run into five figures per employee when you factor in training materials, time off for learning, and lower productivity during training. A reasonable working range is $10K–$50K per person. On the other hand, laying off staff and hiring new specialists has its own costs – severance packages, recruiting fees, lost domain knowledge – which can range from $20K up to $100K per head for higher-level positions. By the raw numbers, it often appears cheaper to retrain an employee than to fire and hire anew. But that assumes the employee can indeed make the leap from, say, being an Excel power-user to an AI model supervisor or data curator. Many won’t make that transition successfully, and some roles simply won’t be needed in anything like their current form. The risk is investing in reskilling only to find that those workers still can’t reach the productivity of a true AI-era specialist, leaving you with tough choices down the line.
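That caveat can be made concrete with a simple expected-cost comparison. The sketch assumes a failed reskilling attempt still ends in a replacement hire, so its cost is the training spend plus the replacement cost weighted by the chance of failure. The $24,000 figure comes from the text above; the $60,000 replacement cost is simply the midpoint of the $20K–$100K range, and the success probabilities are hypothetical.

```python
def expected_reskill_cost(reskill_cost: float, replace_cost: float, p_success: float) -> float:
    """Expected cost of trying to reskill first, then replacing if the attempt fails."""
    if not 0.0 <= p_success <= 1.0:
        raise ValueError("p_success must be between 0 and 1")
    return reskill_cost + (1.0 - p_success) * replace_cost


def breakeven_success_rate(reskill_cost: float, replace_cost: float) -> float:
    """Success probability at which reskilling first costs the same as replacing outright."""
    return reskill_cost / replace_cost


if __name__ == "__main__":
    reskill = 24_000.0   # average reskilling cost cited above
    replace = 60_000.0   # assumed midpoint of the $20K-$100K replacement range

    print(f"break-even success rate: {breakeven_success_rate(reskill, replace):.0%}")
    for p in (0.3, 0.5, 0.8):  # hypothetical success probabilities
        cost = expected_reskill_cost(reskill, replace, p)
        verdict = "cheaper" if cost < replace else "more expensive"
        print(f"p={p:.0%}: expected cost ${cost:,.0f} ({verdict} than replacing)")
```

Under these assumptions, reskilling pays off only if at least 40% of retrained employees succeed in the new role. Below that rate, the apparently cheaper option costs more than hiring specialists from the start.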

It’s a classic dilemma: there’s no easy way to “automate away” labor costs without incurring short-term pain. Leaders should be budgeting for overlap periods and generous training and change management initiatives. Few are. In corporate earnings calls and budgets, we rarely see line items for “productivity loss during AI implementation” or “retraining costs for 20% of workforce.” Those expenses are real and they’re coming. Companies that gloss over this will be blindsided when their margins take a sudden dip during an AI transition, or when their shiny new AI platform fails to deliver ROI because employees weren’t prepared to use it effectively.

“Shadow AI” – The New Wild West of IT

Compounding the workforce challenge is the explosion of unsanctioned AI usage at the ground level. In the early cloud era, CIOs struggled with “shadow IT” – business units adopting their own cloud apps or IT solutions without central approval. Now we have Shadow AI, and it’s Shadow IT on steroids. Practically every department is experimenting with AI tools, often outside of any official program. Marketing may be paying for a GPT-4 based copywriting service. Sales managers have subscribed to an AI sales prospecting tool. Developers quietly use GitHub Copilot (which is built on OpenAI) to write code. HR might be dabbling in an AI resume-screening service. And employees everywhere are plugging company data into free AI chatbots to get work done faster, sometimes ignoring internal guidelines.

The average enterprise today uses a bewildering array of AI-enabled applications – one security survey in 2025 found companies had an average of 254 AI-powered apps in use across the business. Most of those weren’t deployed by IT officially. Each instance is a potential data risk (what if someone pastes sensitive client info into that AI tool?) and a cost risk (many of these tools start free but charge premium fees as usage grows). About 68% of employees who use AI admit they hide it from their bosses, and nearly half said they would defy any ban on AI tools at work. In other words, the genie’s out of the bottle – employees find AI too useful to resist, even if it means bending rules.

This Wild West of AI adoption means hidden expenditures and risks. An enterprise might think it has, say, half a dozen official AI deployments, but in reality there are dozens of micro-pilots and tool subscriptions across teams. The earlier example of a company underestimating its AI spend by more than half was due to exactly this phenomenon – AI costs were embedded in SaaS “premium” editions, data cloud overages, and consulting fees labelled as “analytics enhancements.” Finance teams are now starting to develop “AI spend” audits to uncover these. They often find multiple departments unknowingly paying for similar AI services, or paying much higher retail rates than they would under an enterprise agreement. And on the risk side, there have been wake-up calls: employees pasting proprietary code or strategy documents into external AI tools, leading to data leaks. (One prominent case involved DeepSeek, a trendy new AI chatbot from a startup based in China; it prompted a scramble in the Pentagon and other agencies to ban the app after discovering sensitive info was being sent to it.)

The bottom line is that nobody is fully in control of AI adoption at most organizations. CIOs and CTOs are sprinting to set policies (for example, forbidding use of public AI tools for confidential data, or creating internal AI sandboxes), but enforcement is tough. This mirrors the early days of cloud and BYOD (bring your own device) trends, but accelerated. Eventually, companies will likely consolidate and standardize on fewer AI platforms – but by then, they may have already incurred significant costs and risks from this free-for-all phase.

For now, business leaders should assume that actual AI usage is higher than reported, and ensure they have a way to monitor and manage it. It’s better to provide sanctioned, secure AI tools for employees (with enterprise agreements in place) than to pretend you can stop them from using AI and drive it underground.

Industry Reality Checks

How these dynamics play out varies by industry, but no sector is untouched. Here’s a brief look at four sectors undergoing particularly dramatic change:

1. Financial Services: Banks, insurers, and investment firms are leading the charge in AI adoption – and feeling the pain early. Virtually every bank is now building AI models for lending decisions, trading, fraud detection, and customer service. The efficiency gains are real: loan applications that used to take days of human review can be auto-processed in minutes, with humans only handling the tricky exceptions. But the human toll is also real: whole teams of underwriters and junior analysts are being pared down. As Dario Amodei warned, those classic entry-level office jobs are disappearing. One major bank recently announced a new AI-driven loan platform while quietly offering buyouts to dozens of credit analysts – the AI was effectively their replacement.

From a cost perspective, finance firms are discovering the token-cost trap we described. AI usage fees are eating into product margins at an unsustainable rate. Early pilot projects might have been written off as R&D, but now that AI features are being rolled into customer offerings, the ongoing costs are hitting the P&L. A bank that offers an AI-enhanced wealth management tool, for example, might spend a small fortune on GPT-4/5 queries to generate personalized analyses for clients. Analysts at a16z noted that for some enterprise SaaS products using GPT-4, the token processing costs alone could equal 30–70% of gross margin. Finance is seeing exactly that: what used to be a high-margin software add-on becomes a low-margin service once you factor in the OpenAI or Anthropic API bills. This is quickly becoming “the new normal” in financial IT spend – not a one-off pilot cost, but an ongoing operational expense that needs to be priced into products. Financial institutions are now negotiating bulk usage agreements with AI vendors or investing in proprietary models to try to claw back some control over these costs.

2. Healthcare: Healthcare stands to benefit enormously from AI – and it knows it. AI models are already demonstrating diagnostic accuracy that can match or exceed radiologists for certain tasks (for example, in detecting cancers on images). And healthcare’s administrative overhead, which infamously accounts for roughly 30% of U.S. healthcare costs, is a ripe target for AI automation. If AI could streamline billing, coding, appointment scheduling, and basic patient inquiries, that overhead could perhaps drop to 10%. In theory, that’s fantastic for healthcare providers’ future margins (and patient bills). But in the short term, it means a massive workforce dislocation. Thousands of medical billers, schedulers, insurance claims processors, and even some clinical staff might be made redundant or redeployed. We’re talking about people who occupy entire floors in hospital office buildings today.

During this transition, healthcare organizations face the double-cost dilemma acutely. They must continue to pay staff while also investing heavily in AI systems and dealing with the regulatory and safety burdens unique to health tech. Productivity in the transition may actually drop initially, as clinicians and administrators adjust to new AI-driven workflows (with lots of skepticism to overcome). There’s also a trust factor – doctors need to be convinced to rely on AI second opinions, and patients need to trust AI involvement in their care. This can slow down adoption and prolong the period of inefficiency.

On the cost side, healthcare firms that dipped toes in AI are encountering the same cost overrun issues. Recall the example of a pilot project projected at $50K a month ballooning to $200K – that was a real case at a hospital network optimizing its supply chain with AI. The extra “infrastructure fees” came from needing more cloud capacity, higher-than-expected data storage for medical records, and so on. Healthcare data is huge and heavily regulated, which means you often can’t use the cheapest options for storage or compute. So the “AI tax” – the extra cost of doing AI in a compliant way – is high. Nevertheless, the pressure to deploy AI is intense, because competitors are doing it and because in the long run it could save lives and money. Healthcare CEOs are walking a tightrope between short-term cost pain and long-term payoff. They should brace for some brutal quarters financially during the ramp-up. As one hospital CFO put it, “Our AI told us how to save $10 million a year… but it’s costing us $15 million this year to implement it.”

3. Professional Services (Consulting, Advisory, Law): It’s an irony not lost on anyone that management consultants and IT advisors are some of the loudest proponents of “AI transformation” for their clients, yet their own industry is directly in the crosshairs of automation. Big consulting firms have tens of thousands of bright analysts – the kind who build slide decks, financial models, and market research reports. Those are precisely the tasks generative AI and analytics automation can perform at lightning speed. Already, forward-looking consultancies are using AI internally to draft reports, analyze client data, and even generate strategic recommendations (with human oversight). This will likely allow them to do the same work with fewer junior staff. It’s telling that in earnings calls, some major firms have hinted at slowing their MBA recruitment in coming years, expecting efficiency improvements from technology.

However, in the immediate term, consultancies are mostly adding AI as a value-add rather than replacing billable staff. They face a competitive imperative: if Firm A can do a project in 4 weeks with AI assistance and Firm B takes 6 weeks manually, Firm A wins – unless Firm B also augments with AI. So everyone is moving quickly to arm their teams with the best tools. The mid-term effect will likely be fewer entry-level positions and a need for more AI-fluent consultants (which schools are now scrambling to produce). Another wrinkle: large enterprise clients are demanding steep discounts on AI-heavy consulting projects, because they know once the upfront work is done, the marginal cost of running models is low. We hear of Fortune 100 companies negotiating 50–70% discounts on big multi-year AI transformation contracts, leveraging their volume and data access. Meanwhile, smaller and mid-market clients – who don’t have such clout – are still paying higher rates (and often getting hit with overage fees if a consultant’s AI solution calls far more API queries than expected). This bifurcation could squeeze mid-sized consultancies hardest: they pay retail for AI tools and can’t command premium prices from savvy clients, pinching their margins tightly.

4. Manufacturing and Chipmakers: Manufacturers initially saw the AI boom as a boon – after all, someone has to make all these AI chips, servers, and gadgets. And indeed, companies like Nvidia (chips), TSMC (semiconductor manufacturing), and others have profited immensely. But the reality is that only a few firms globally have the capability to produce advanced AI hardware at scale. Essentially, the big three are: TSMC in Taiwan and Samsung in South Korea (for cutting-edge chip fabrication), and to a lesser extent Intel (which is trying to catch up in process tech). Many other players who thought they could ride the AI hardware wave – smaller chip startups, contract manufacturers, component suppliers – are fighting over scraps or struggling with supply chain bottlenecks. The high capital costs and technical barriers mean the spoils are very concentrated.

Moreover, manufacturing as a sector will face the same AI automation wave in its processes. Once all this new infrastructure is built, the operational AI will be turned inward to optimize factories, supply chains, and logistics. The same AI that needed new factories will then be used to run those factories with half the clerical staff. Foxconn, for instance, is investing in AI to manage workflows with fewer human supervisors; automotive plants are eyeing AI for predictive maintenance and inventory management, reducing the need for schedulers and quality control personnel. So manufacturing companies could find that after the current surge in demand (building AI hardware), they enter a period where their own back-office and even engineering roles are aggressively streamlined by AI. The net outcome could be fewer total jobs in manufacturing despite higher productivity and output.

In short, every industry must contend with AI’s double-edged sword: new capabilities and efficiencies, but also new costs, new dependencies, and workforce upheaval. No one gets a free pass.

The China Factor That Few Acknowledge

While American companies scramble against each other in the AI arms race – competing for talent, compute, and market share – China is playing a different game entirely. Beijing is executing a state-directed AI strategy on a scale that rivals the U.S. private sector’s efforts dollar-for-dollar. The Chinese government has outlined plans to invest roughly ¥10 trillion (about $1.4 trillion) in advanced technologies, including AI, over a 15-year span. This isn’t venture capital seeking profits or companies chasing quarterly targets – it’s a coordinated national agenda to achieve global tech dominance by 2030.

What does that mean in practice? It means China is pouring money into AI education (they produced 3.5 million STEM graduates in 2020 alone), research (4,500+ AI firms across the country), and infrastructure (from nationwide cloud networks to AI in manufacturing). They are not as constrained by short-term ROI concerns or by fears of displacing workers – the state can cushion and redirect labor as needed. If AI displaces jobs, the government can implement policies to retrain or support those workers in the short run. The strategic view is long-term: whoever leads in AI by 2030 could shape the global economic and security landscape for decades.

Meanwhile, the European Union is charting its own path, one that prioritizes regulation and ethical oversight (through initiatives like the EU AI Act) even if that slows AI deployment. The EU focus is on privacy, safety, and controlling AI’s impact, arguably at the expense of speed and perhaps capability. This could put European companies at a disadvantage if the rest of the world moves faster, but the EU is betting on a future where trustworthy AI is a competitive differentiator.

For U.S. firms, the takeaway is that treating AI as purely a domestic free-market competition misses the bigger picture. America’s tech giants and startups are collectively spending that ~$1.8T, which is tremendous, but the spending is uncoordinated and may even duplicate effort. By contrast, China’s ~$1.4T state plan (almost matching the scale) is orchestrated – investments complement each other, and the government is ensuring critical gaps (such as domestic chip production, made urgent by export controls) are addressed. One example: facing U.S. export controls on top-tier chips, China has redirected efforts to develop AI algorithms that can perform decently on less advanced chips, and to accelerate indigenous chip-making for AI.

If American companies continue in an every-company-for-itself mode, they could unwittingly cede the AI leadership to a coordinated state actor. The long game isn’t just who makes the best model next year, but who controls the ecosystem and reaps the majority of economic benefits by 2030. For now, U.S. firms also have to consider that a significant portion of global AI GDP impact – an estimated 70% – will likely accrue to China and North America combined. It’s essentially a two-horse race at a national level, even as dozens of players compete in the U.S. market. American business leaders might need to think beyond quarterly earnings and consider collective outcomes: innovation is great, but if everyone is building on proprietary standards and cloud silos, the fragmentation could weaken the whole.

The global context should impart urgency. The ~$15 trillion economic boost AI is projected to add to the world economy by 2030 will not be divided equally. If the U.S. wants a major share (and to set the rules), its approach to AI investment and infrastructure may need more cohesion – perhaps more public-private partnership or at least industry consortia to tackle common challenges (standards, safety, workforce retraining at scale). Otherwise, the current approach – each company racing to lock in its own territory – might hand the game to those playing with a national playbook.

The 2026 Scenario: High Costs vs. Low Costs – Either Way, Disruption

Let’s fast-forward 18–24 months. By 2026, many analysts expect we’ll either be on the verge of artificial general intelligence (AGI) – a system with human-level cognitive ability – or at least something very close. OpenAI’s CEO Sam Altman has even hinted that AGI is possible within that timeframe. Whether or not one buys that specific prediction, it’s clear the models will be far more powerful than today and far more ubiquitous.

Now imagine what happens in each of two broad scenarios:

- Scenario A: AI demand outpaces supply (High Costs Spike). In this scenario, everyone from Fortune 500 companies to small businesses suddenly wants access to the most advanced AI (say GPT-6 or GPT-7-level systems) because the capabilities are game-changing. Compute capacity is still catching up, so usage is effectively rationed by price. The cost of top-tier AI goes through the roof – 10× higher than today – due to scarcity. Only the richest firms can fully utilize it, and even they see major margin hits. This is somewhat like the early days of any new tech, but magnified: if AGI emerges, the rush to deploy it could make 2023’s GPU shortages look trivial. Under this scenario, any company whose strategy relies on cheap AI would be in trouble. High costs would directly compress margins for AI-dependent services (if you can’t raise prices accordingly), and some companies might be priced out of using the best AI altogether, losing competitive edge.

- Scenario B: AI supply outpaces demand (Low Costs Crash). In this alternate scenario, Altman’s vision materializes: custom AI chips, abundant GPUs, and perhaps new energy sources (even talk of dedicated nuclear reactors for data centers) come online by 2026. This floods the market with cheap compute. The cost to run advanced AI plummets by 90% or more. What happens then? Well, suddenly the technology is accessible to all – even startups and smaller firms can afford near-AGI level services. This sounds great, but it commoditizes a lot of what companies may have built over the past few years. If everyone can use the same super-AI at negligible cost, then simply having that AI doesn’t confer an advantage. Differentiation shifts to data, branding, and integration – things incumbents might have, but also things an agile newcomer could exploit. Low costs would also undermine business models that were based on charging for AI usage. If the price of AI drops tenfold, and you were reselling AI-powered services, your revenue could crater unless you find new value to add.

The uncomfortable truth is both scenarios are highly disruptive. In Scenario A (high-cost), many AI initiatives could become unprofitable or only viable for a select few – it “kills margins directly” by making input costs exorbitant. In Scenario B (low-cost), the value of AI gets baked into the baseline expectations and it’s hard to charge a premium for it – it kills margins indirectly by intensifying competition and eroding pricing power. Think of how internet bandwidth went from expensive to cheap: good for consumers, but ISPs and telcos saw their services commoditized, and only those who scaled massively or layered other services survived.
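
The margin arithmetic behind the two scenarios can be sketched in a few lines. This is a minimal illustration, not a forecast: the $100 price, the $20 AI cost, the $40 of other costs, and the 50% price cut in Scenario B are all hypothetical numbers chosen only to show the direction of the effect.

```python
def gross_margin(price: float, ai_cost: float, other_cost: float) -> float:
    """Return gross margin as a fraction of the selling price."""
    return (price - ai_cost - other_cost) / price


# Hypothetical baseline (illustrative numbers, not taken from the article):
# a service sold for $100 per unit, with $20 of AI compute and $40 of other costs.
PRICE = 100.0
AI_COST = 20.0
OTHER_COST = 40.0

baseline = gross_margin(PRICE, AI_COST, OTHER_COST)

# Scenario A: AI input cost rises 10x and the price cannot be raised.
scenario_a = gross_margin(PRICE, AI_COST * 10, OTHER_COST)

# Scenario B: AI cost falls 90%, but competition forces the price down.
# The 50% price cut is an assumption chosen only to illustrate the effect.
scenario_b = gross_margin(PRICE * 0.5, AI_COST * 0.1, OTHER_COST)

for label, margin in [
    ("baseline", baseline),
    ("A: high cost", scenario_a),
    ("B: low cost", scenario_b),
]:
    print(f"{label:>12}: {margin:.0%}")
```

With these inputs the margin moves from 40% at baseline to deeply negative in Scenario A and to 16% in Scenario B. The cheap-AI case still hurts because the price falls faster than the total cost base.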

The likely reality will lie somewhere between these extremes, or oscillate: perhaps a short spike then a drop. But companies must be prepared for both. The firms that will survive and thrive in 2026 are not betting solely on “AI will always be scarce and expensive” or “AI will soon be abundant and cheap” – they’re hedging for either outcome. They are building flexibility into their models: focusing on proprietary data advantages (so they remain competitive even if the raw AI is cheap), and also investing in efficiency and vertical integration (so they can weather a period of high costs if needed). They’re also thinking about new revenue streams enabled by AI (to capture the upside of ubiquity) while keeping a close eye on costs (to ride out scarcity).

As an analogy, consider cloud computing: early on, compute was expensive and only big players benefited; later it became cheap and everyone had it, shifting the value elsewhere. AI could follow a similar trajectory but on fast-forward.

Three Uncomfortable Truths

Stepping back from the day-to-day chaos, a few hard truths emerge for business leaders:

First: The workforce disruption from AI will happen faster than any prior tech shift. We’re looking at a five-year horizon for changes that the internet took two decades to enact. Companies that are still slogging through “digitization” or hesitant about automation are going to be caught flat-footed. The overlap period of paying for both humans and AI (the double-cost phase) is real, and it’s a margin killer that few financial models account for. Assume you’ll have a year or more where you’re essentially paying twice for the same output – once to people and once to machines – while you transition, and plan for that. If AI does indeed eliminate or redefine half of entry-level white-collar jobs by 2030 (as Amodei predicts, alongside Hinton’s warning of massive unemployment), the social and organizational whiplash will be tremendous. Productivity will eventually surge, but many companies won’t survive the interim turmoil unless they manage it proactively (retraining, reallocating, and frankly, resizing where necessary).
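
To see why the double-cost phase matters in a budget, here is a rough sketch of the arithmetic. Every figure is a hypothetical assumption for illustration: a $100,000 monthly payroll, $30,000 of monthly AI spend, a 24-month planning horizon, and half of payroll remaining once the transition completes.

```python
def transition_cost(payroll: float, ai_spend: float, overlap_months: int,
                    horizon_months: int, retained_share: float) -> float:
    """Total cost over the horizon when humans and AI are both paid during the overlap.

    During the overlap, full payroll and AI spend are both incurred.
    Afterwards, only `retained_share` of payroll remains, plus AI spend.
    """
    overlap = overlap_months * (payroll + ai_spend)
    steady = (horizon_months - overlap_months) * (payroll * retained_share + ai_spend)
    return overlap + steady


# Hypothetical monthly figures (assumptions for illustration only).
PAYROLL = 100_000.0
AI_SPEND = 30_000.0
HORIZON = 24

# Doing nothing: full payroll for the whole horizon, no AI spend.
status_quo = HORIZON * PAYROLL

# A plan that assumes an instant switch with no overlap at all.
naive_plan = transition_cost(PAYROLL, AI_SPEND, 0, HORIZON, 0.5)

# The same plan with a 12-month period of paying for both.
with_overlap = transition_cost(PAYROLL, AI_SPEND, 12, HORIZON, 0.5)

print(f"status quo:   ${status_quo:,.0f}")
print(f"naive plan:   ${naive_plan:,.0f}")
print(f"with overlap: ${with_overlap:,.0f}")
```

Under these assumptions the instant-switch plan projects $1.92M over two years, while a 12-month overlap costs $2.52M, which is more than the $2.4M of changing nothing. The savings arrive only after the horizon that many budgets cover.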

Second: Energy infrastructure is the new strategic bottleneck for the AI era. This is no longer an abstract ESG talking point; it’s a core operational issue. Affordable, reliable electricity is now as critical as bandwidth was to the internet. Regions with cheap, plentiful power (and the political will to rapidly permit new projects) will have a huge advantage in attracting AI business. Those stuck in high-cost energy markets will face much higher operational costs or limits on growth. Every major advance in AI capability comes with exponentially greater power demands – it’s not linear. If GPT-4 consumed X power, GPT-5 might need 3X, and a potential GPT-6 could need 10X or more. Our grids and data center infrastructure are straining to keep up. The companies that invest now in energy solutions – whether it’s long-term renewable contracts, their own solar/wind farms, backup generators, or even experimental options like small modular nuclear reactors – will be better insulated from volatility. In essence, there’s no AI without electricity. Leaders should be asking not just “Do we have the compute?” but “Do we have the power (and cooling and distribution) to use that compute at scale, at a cost that makes sense?”

Third: Market concentration and ecosystem control are accelerating. Despite the rhetoric of democratization, we’re seeing the big tech firms tighten their grip. The “Big Four” (or five) aren’t simply investing in AI themselves; they’re building entire ecosystems and supply chains that ensure a lion’s share of value stays with them. They invest in chips (Nvidia, custom silicon), they dominate cloud platforms, they develop foundation models, and they acquire or fund promising startups to fold into their empires. If you’re a supplier or partner, you either align closely with one of these ecosystems or risk being locked out. For mid-sized tech companies or enterprises, this means you may have to choose a horse and ride it – aligning with Azure/OpenAI, or AWS/Anthropic, or Google/Gemini, for instance – because being caught in between could leave you unsupported. It also means scale is more important than ever. Smaller players with a great idea might quickly get bought or pushed out by a platform’s competing feature. The old idea that tech cycles start fragmented and then consolidate is playing out at hyperspeed in AI. Already, just a handful of companies account for the majority of AI research advancements and AI computing power deployed. The combined capital expenditures of Amazon, Google, Meta, and Microsoft are set to exceed $320 billion this year, much of that on AI and cloud. That kind of spending erects huge barriers to new entrants. Unless you have a very defensible niche or access to unique data or community, you’ll have to scale up fast, partner up, or sell. This concentrated power also raises regulatory and national security questions, but those might be slow to resolve. In the meantime, the rich get richer: big platforms will capture outsized value from the AI boom, and many smaller software companies could find their margins squeezed to the breaking point (because the platform takes a cut at every layer – cloud, model API, app store, etc.).

Put simply, the $1.8 trillion AI investment isn’t just about tech – it’s about economic reorganization at breakneck speed. We are watching AI reshape industry structures, job markets, cost models, and geopolitical balances all at once.

OpenAI’s massive infrastructure splurge (and similar moves by its peers) is effectively billing the future to the present. They are spending huge sums now to build what they assume will be the foundational infrastructure of tomorrow’s economy. That bill comes due regardless of whether AI meets every ambitious goal by 2030. Companies that recognize both the opportunity and the cost restructuring that AI brings stand a chance of navigating this upheaval. They will treat AI not as just another IT project or shiny tool, but as a fundamental rewiring of how they create value (and for whom).

Companies that treat AI simply as new software to buy or a line-item expense to manage, on the other hand, are in for a rude awakening. They may be inadvertently writing their own acquisition or obsolescence story. In the gap between AI’s projected $15 trillion boost to global GDP by 2030 and the half of entry-level white-collar jobs at risk over the same timeframe lies the strategic battlefield where fortunes will be made and lost.

In summary, these infrastructure investments and AI deals are not really about technology – they’re about market control and survival. When OpenAI teams with Oracle for compute, it’s not a simple vendor contract; it’s a maneuver in a high-stakes game of control. Oracle becomes the indispensable supplier (the “dealer”), and OpenAI positions itself as both a voracious consumer and a standard-setter (both player and house in the casino). Every other business is left vying for access on terms it does not set – essentially buying chips to sit at a table that the big players own. To thrive, businesses will need to play smarter: hedge their bets, form the right alliances, invest in resilience, and above all, prepare for an AI-driven economy that will look very different from today’s. The future is being built now, and the invoice for it is already in the mail.
